Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

The disagreeable behaviour of the kappa statistic - Flight - 2015 - Pharmaceutical Statistics - Wiley Online Library

![Computing Inter-Rater Reliability for Observational Data: An Overview and Tutorial. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e3ee8537cead698052a101cd6c5925d08820f6f2/17-Table4-1.png)

[PDF] Computing Inter-Rater Reliability for Observational Data: An Overview and Tutorial. | Semantic Scholar

Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification - ScienceDirect

![The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/4-Table2-1.png)

[PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar

![The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/4-Table3-1.png)

Inter-observer variation can be measured in any situation in which two or more independent observers are evaluating the same thing. Kappa is intended to. - ppt download

![More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/1-Figure1-1.png)

[PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar

![More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/2-Figure2-1.png)

![The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/6-Table5-1.png)

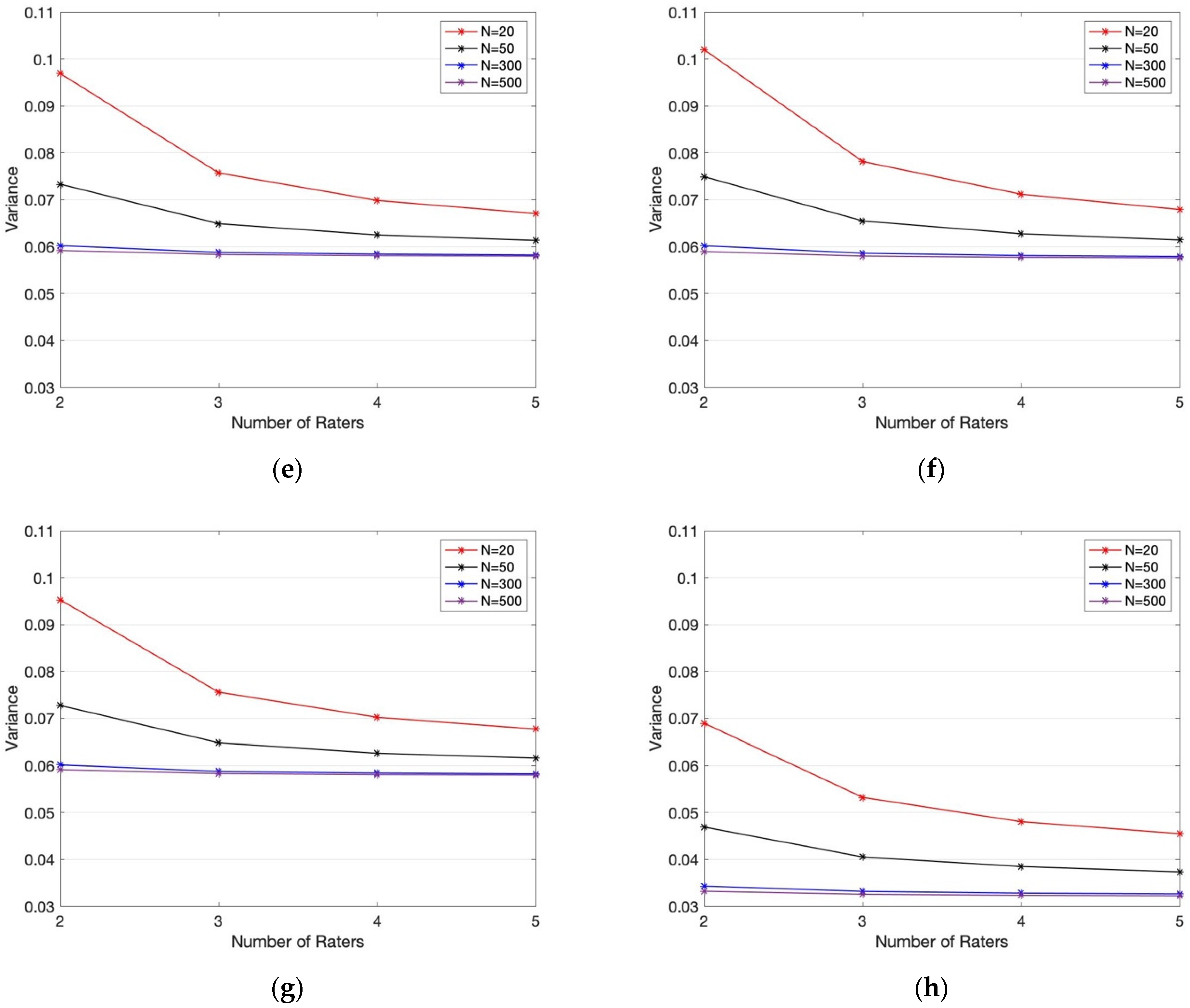

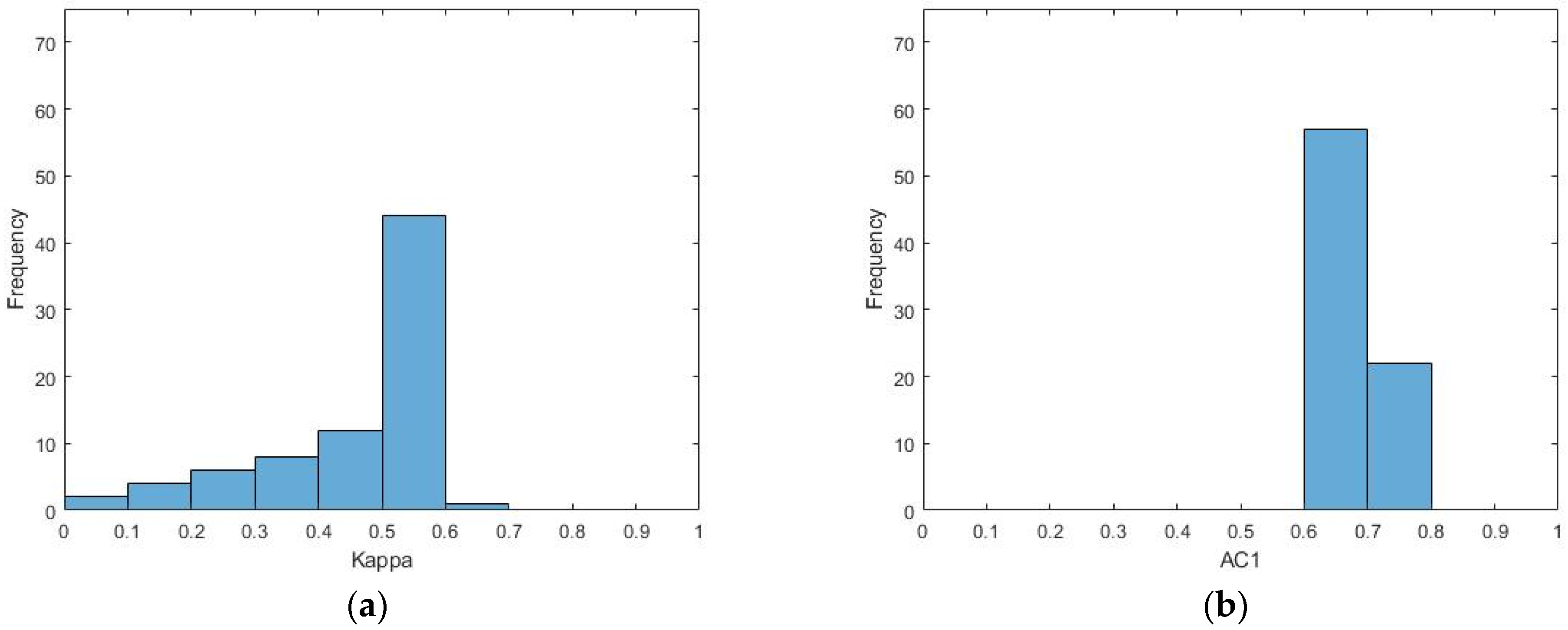

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters | HTML
